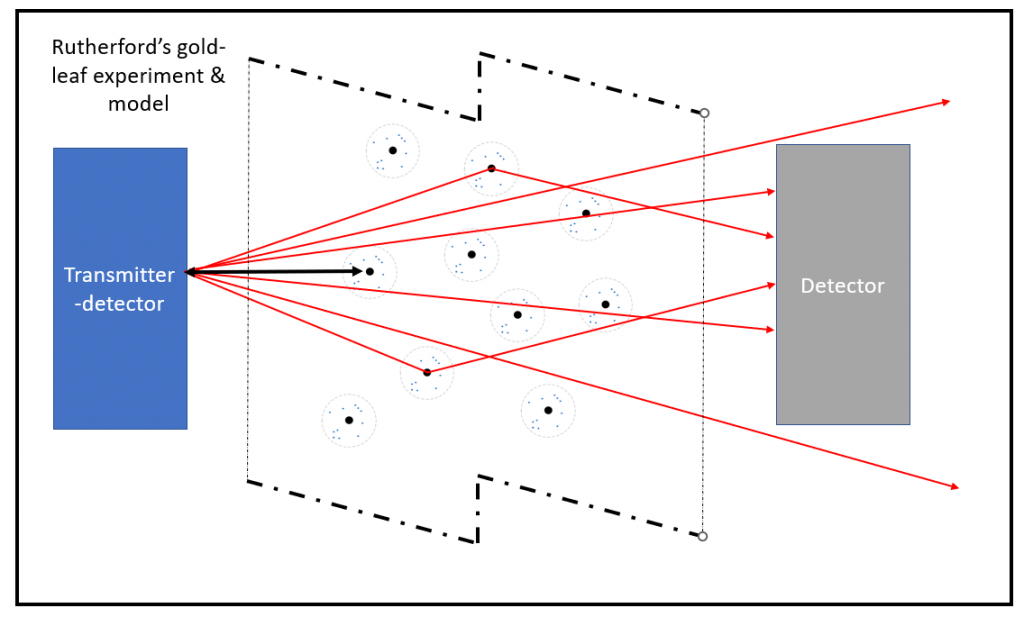

In 1911 the New Zealand-born physicist Ernest Rutherford published his interpretation of experiments, carried out by Hans Geiger and Ernest Marsden under his direction, in which a stream of particles (1) was fired at a thin gold foil with the aim of better understanding the structure of the atom (Figure 1). The details are unimportant in this context, but one can think of it in abstract terms as kicking a football at a goal with a net full of holes in front of a layer of invisible concrete bollards (2). To his surprise, or maybe not, he found that while most of the particles went straight through the foil, a few were deflected and a very few came straight back.

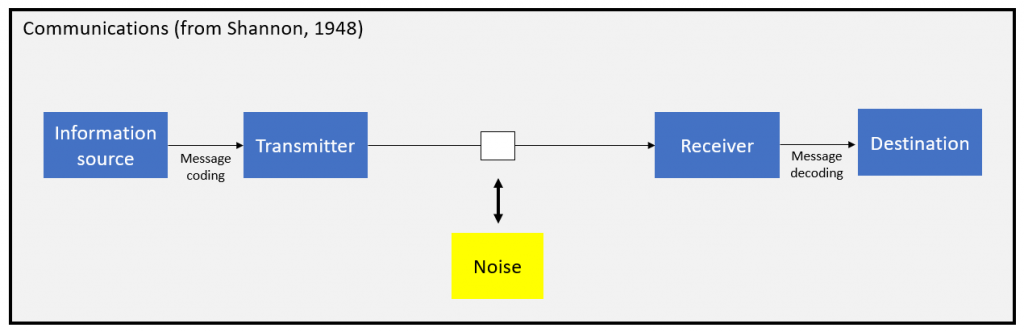

In 1948 Claude Shannon published the first version of his iconic paper *A Mathematical Theory of Communication* (3), describing how communication works, with encoders, decoders and a variable amount of noise (4) introduced within the channel pathway (Figure 2). His paper and others paved the way for modern digital communications (5).
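
Shannon's encoder, noisy channel and decoder can be sketched in a few lines. The following is a minimal illustration only, assuming a binary symmetric channel (each bit flipped independently with probability p) and a simple three-fold repetition code with majority-vote decoding. These are textbook choices made for illustration, and the function names and noise level are hypothetical. It also computes the channel capacity C = 1 - H2(p), the rate below which Shannon showed that arbitrarily reliable transmission is possible.

```python
import math
import random


def encode(bits, n=3):
    """Repetition encoder: transmit each bit n times (n should be odd)."""
    return [b for b in bits for _ in range(n)]


def channel(bits, p, rng):
    """Binary symmetric channel: flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def decode(bits, n=3):
    """Majority-vote decoder for the repetition code."""
    return [1 if sum(bits[i:i + n]) > n // 2 else 0 for i in range(0, len(bits), n)]


def bsc_capacity(p):
    """Capacity of a binary symmetric channel, C = 1 - H2(p), in bits per use."""
    if p in (0.0, 1.0):
        return 1.0
    h2 = -p * math.log2(p) - (1 - p) * math.log2(1 - p)
    return 1.0 - h2


rng = random.Random(1948)  # fixed seed so the run is repeatable
message = [rng.randint(0, 1) for _ in range(10_000)]
p = 0.05                   # hypothetical noise level

raw = channel(message, p, rng)                    # no coding at all
coded = decode(channel(encode(message), p, rng))  # encode, transmit, decode

raw_errors = sum(a != b for a, b in zip(message, raw))
coded_errors = sum(a != b for a, b in zip(message, coded))
print(raw_errors, coded_errors, round(bsc_capacity(p), 3))
```

Repetition cuts the error count sharply, but at the cost of sending three symbols per bit. Shannon's result is that much more efficient codes exist for any rate below C.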

With this in mind, one can frame and usefully apply Rutherford’s and Shannon’s concepts in the normal practice of teaching, learning and Public Relations:

Anything one says or writes or broadcasts (6) is a signal. A change. A delta. A ‘message’ is a series of signals arranged in a particular way. So one can consider the exercise in a Rutherfordian and Shannonian way in the context of predicting and analysing transmission and response dynamics (7). I have used the term ‘signal’ here but ‘message’ or ‘press release’ [generally] work as well. And I have used the term ‘sample’ in recognition of the Rutherfordian scientific principle, though ‘class’ and ‘audience’ and ‘customer’ are [generally] also relevant. So with apologies in advance:

- Did the signal go straight through the target sample? Can this be measured? How?

- Was the signal fully absorbed by the sample with no measurable reflective harmonic or after-effect? How can this be tested?

- Was the signal attenuated, blocked, deflected or otherwise distorted before it got to the sample?

- Is there a detectable store-and-forward type memory and relay function in the sample? What are the timing and other dynamics?

- Is there an active element of signal amplification? If so, what level of amplification, distortion or noise has been introduced?

- Is the reflection and/or onward transmission an exact replication and mirror image? What has been added? What has been removed? What has been enhanced?

- Alternatively, what fragment of the signal was reflected or onward-transmitted? Why this fragment and not others?

- What impact has the initial signalling and subsequent response(s) had on the transmitter itself?

- What would happen if the signal and content were generally or specifically publicly available? (See first bullet.)
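
Several of these questions can be made at least partly measurable. Below is a minimal sketch, assuming that the sample's response can be treated as a delayed, scaled copy of the signal plus a residual. This is a linear, time-invariant simplification that real classes and audiences will not fully honour, and the function names and toy data are hypothetical. Cross-correlation estimates the relay delay (the store-and-forward timing), a least-squares fit estimates amplification or attenuation, and the residual is a crude measure of distortion, noise, or material that has been added or removed.

```python
# Hypothetical sketch: compare a transmitted signal with the response observed
# from a sample, assuming response ~ gain * (signal delayed by lag) + residual.
# Both sequences are assumed to be equally long and sampled at the same rate.

def best_lag(sent, received, max_lag):
    """Delay (in samples) that maximises the raw cross-correlation."""
    def score(lag):
        return sum(sent[t] * received[t + lag] for t in range(len(sent) - lag))
    return max(range(max_lag + 1), key=score)


def gain_and_residual(sent, received, lag):
    """Least-squares gain g in received[t + lag] ~ g * sent[t], plus RMS residual.

    g > 1 suggests amplification and g < 1 attenuation; a large residual
    suggests distortion, added noise, or content added or removed en route."""
    pairs = [(sent[t], received[t + lag]) for t in range(len(sent) - lag)]
    energy = sum(s * s for s, _ in pairs)
    g = sum(s * r for s, r in pairs) / energy
    rms = (sum((r - g * s) ** 2 for s, r in pairs) / len(pairs)) ** 0.5
    return g, rms


# Toy data: a pulse pattern, relayed two samples later at 1.5x strength.
sent = [0, 1, 3, 1, 0, 0, 2, 0, 0, 0, 0, 0]
received = [0.0, 0.0] + [1.5 * s for s in sent[:-2]]

lag = best_lag(sent, received, max_lag=4)
gain, residual = gain_and_residual(sent, received, lag)
print(lag, gain, residual)
```

With real feedback the residual will not be zero, and its size relative to the fitted part is one rough answer to "what has been added, removed or distorted?".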

Thus, using this reductionist (1′) approach, the information communications process can be thought of and modelled as an electronic or biological circuit comprising whatever media and active and passive components are available for information transfer and actuation. As a corollary, there is no such thing as ‘bad’ information on the feedback loop pathway. There is only ‘feedback’. This sometimes helps in removing the noise element in the feedback signal commonly described as ‘emotion’ (8). Further, by considering all signals (messages, classes, press releases) as test signals, one can, like a writer of financial options, be [reasonably] indifferent to the feedback. It is a result. It is data. Update the model. Or update the signal. Or do nothing. It is also worth brushing up on Newton’s laws of motion in this respect: action, reaction and momentum. And to expand on this, sometimes the non-signal paradigm, and its complement the non-response pattern, are the most powerful signal-not-signals. In PR. In politics. In scientific discovery.
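
The "it is data, update the model, or do nothing" loop can be sketched as a simple error-driven update. This is an illustration only, assuming a one-parameter model (predicted response = weight × signal strength) and using the least-mean-squares (Widrow-Hoff) delta rule as a swapped-in technique; it is not a method described in this note, and the true gain, rate and tolerance are hypothetical values.

```python
# Hypothetical sketch of "feedback is data: update the model, or do nothing",
# using the least-mean-squares (Widrow-Hoff) delta rule on a one-parameter model.

def update_model(weight, signal, observed, rate=0.1, tolerance=0.05):
    """Return (new_weight, error) after one piece of feedback.

    The model predicts observed ~ weight * signal. If the prediction error is
    within tolerance, do nothing; otherwise nudge the weight toward the data."""
    error = observed - weight * signal
    if abs(error) <= tolerance:
        return weight, error  # feedback consistent with the model: no update
    return weight + rate * error * signal, error


TRUE_GAIN = 0.6  # hypothetical: how strongly the audience actually responds
weight = 1.0     # the model's initial (over-optimistic) guess

for step in range(60):
    signal = (1.0, 0.5, 2.0)[step % 3]  # vary the strength of the test signal
    observed = TRUE_GAIN * signal       # noise-free feedback, for clarity
    weight, error = update_model(weight, signal, observed)

print(round(weight, 3))
```

The `tolerance` branch is the "do nothing" option. "Update the signal" would instead hold `weight` fixed and choose the next `signal` to hit a target response.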

This experimental paradigm also links to the Efficient Market Hypothesis (EMH) for asset pricing. EMH posits various scenarios based on information availability and how that information is disseminated, processed and thence discounted into an asset price. EMH is, however, incomplete, both in its axioms and assumptions and in the model scenarios it posits. Burton Malkiel (9 – *A Random Walk Down Wall Street*) provides an excellent commentary on the dilemmas and quandaries present in EMH.

We can also think of social media, and human communication in general, as a nodal clustered network of links and message activity, as set out in figures 1 and 2. I prefer, though, the term ‘mesh’ to ‘network’ and the term ‘filament’ to ‘link’, as to my mind they better describe the parallel multi-threading between nodes (10). In the context of current newsflow, the nodes could be machine-based, human-based, community-based or a hybrid. The difference is that as soon as one A(G)I (11), machine node, GPU or cluster learns something (12), they all potentially have, because technology interface protocols make instantaneous, frictionless information transfer possible.
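
One way to make the machine-versus-human difference concrete is to model the mesh as a weighted graph, where each filament carries a transfer delay, and compute when each node first receives a signal. The sketch below uses Dijkstra's shortest-path algorithm for this. The node names, the delays (in hours) and the zero-delay machine filaments are all hypothetical, chosen only to illustrate the "as soon as one learns, they all have" effect.

```python
import heapq


def arrival_times(mesh, source):
    """Earliest time each node receives a signal from `source`.

    `mesh` maps node -> list of (neighbour, delay) filaments. Parallel
    filaments between the same pair are allowed; the fastest one wins.
    Delays must be non-negative (a requirement of Dijkstra's algorithm)."""
    times = {source: 0.0}
    queue = [(0.0, source)]
    while queue:
        t, node = heapq.heappop(queue)
        if t > times[node]:
            continue  # stale entry: a faster route was already found
        for neighbour, delay in mesh.get(node, []):
            candidate = t + delay
            if candidate < times.get(neighbour, float("inf")):
                times[neighbour] = candidate
                heapq.heappush(queue, (candidate, neighbour))
    return times


# Hypothetical mesh: machine nodes share at zero delay; human and community
# filaments are slower (delays in hours, purely illustrative).
mesh = {
    "gpu_a": [("gpu_b", 0.0), ("gpu_c", 0.0), ("alice", 2.0)],
    "gpu_b": [("gpu_a", 0.0), ("gpu_c", 0.0)],
    "gpu_c": [("gpu_a", 0.0), ("gpu_b", 0.0), ("community", 5.0)],
    "alice": [("bob", 24.0), ("community", 1.0)],
    "community": [("bob", 3.0)],
}
print(arrival_times(mesh, "gpu_a"))
```

Every machine node hears the signal at time zero. By contrast, "bob" hears it fastest indirectly, via "alice" and the community, rather than over his own slow filament.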

It is not too much of an extension here to consider machine nodes as providing the opportunity to supplement one’s own neocortex with a so-called ‘exocortex’ (13): onboard memory and processing supported by offboard memory and processing. Interestingly, though, whilst the offboard part is faster and bigger and does not get tired, it is materially less energy-efficient than a human brain, which requires only around 20 watts to generate its beautiful creativity and functions. The human brain is very eco-friendly. And the exocortex comes with the obvious problems Freud described with respect to the interplay between, and sometimes the dissociation of, the ego, the id and the superego (14). Modelling and testing such network effects requires both extreme computing power and machine-machine learning.

So what has all this got to do with Public Relations? Firstly, because PR is a fun and important job. We are all our own PR agents. And secondly, because current events mean that we need good PR on the part of the tech corporations, governments and others to help advance public understanding of the nature of cybernetics and its boundless potential and risks.

Finally for now, I really like Marshall McLuhan’s quote “The medium is the message” (15 – McLuhan, 1964), even though I do not really understand it. Or because I do not really understand it.

If this short note resonates with you, please do connect with me via @philiplambert.

References and notes

1. For this experiment alpha particles were used. These are particles that comprise two protons and two neutrons. They can be thought of as a helium nucleus, that is, a helium atom stripped of its electrons. Alpha particles are emitted when heavy unstable atoms such as uranium and plutonium decay into lighter, more stable ones. An alpha particle will generally capture two electrons to form a stable atom of the gas helium.

2. This is a model intended to help understanding. By definition all models are wrong, though some are more useful than others; see, e.g., George Box (1919–2013).

3. LCCN 49-119922. This is actually the version published in 1949 after review and edits by Warren Weaver. The primary edit was to change “A” to “The” in the paper’s title. This may not have been an accurate or useful update.

4. “Noise” is an interesting concept. Pure noise is a mathematical construct and as such does not exist physically; in practice the noise distortion (sic) level may simply be below the resolution and detection levels of the receiver. Or it may be pre-filtered out. Further, noise is path- and receiver-dependent, and subject to contextual and dynamic false negatives and false positives.

5. The implications of digitisation and quantisation are not generally grasped. Digitisation enables error detection and correction to whatever engineering specification is appropriate, affordable or required. Quantisation sets resolution and precision at a chosen level. In practice, error control is effectively complete and resolution effectively total (4′): the processed signal is good enough, even if it is mathematically and statistically not perfect. And this links to Maxwell’s lovely equations (and Faraday’s experiments), which inspired Einstein. An interesting question is whether the input (or output) signalling is above or below the current or potential sampling rate and processing speed of the processor. Do the signals sometimes pop above or below the signal detection threshold? Does this appear to be stochastic or periodic? Or pseudo-stochastic? Or pseudo-periodic? Clock speeds and relative clock speeds are important.

6. Or thinks, if one is a neuronal scientist, as I am.

7. Based on the signal response envelope (aka what people think and say and do) and how it differs from the model-predicted structure, what does this imply for model-update and/or signal-update? Of course, transforming the envelope into other domains, such as the frequency domain, helps analyse the situation.

8. Emotion can be a valuable signal, but it can also be unwanted noise. Which it is depends on the context, the receiver and the transmitter.

9. Malkiel, B.G., 1990. *A Random Walk Down Wall Street*, 5th ed. Norton, New York.

10. The concept of a node requires some thought. Is it a source or a sink? It is, and can be, both. How does it originate? How does it develop and evolve? This is at once a philosophical and a mathematical question. Do the links or the nodes come first? Is the node quantised and digital? Or is it analogue? Or both at the same time? Each node can be considered a black box, but if the node is opened up to successive levels of detail (the white-box technique), what does one find? What does one find at the [mathematical] limit? (See site home page.) In this respect the concept and practicality of insulation and non-interference from noise are critical for information processors, whatever they may be. Classical or quantum computers. Or (quantum) brains. Interference collapses the wave pattern, or at least depletes the signal. Of course. So a question here for philosophers and electrical & electronic engineers: does the signal ever die? Or can it always be recalled? Mathematicians and physicists can catch up later on this thought-idea.

11. The terms Artificial Intelligence and Artificial General Intelligence are poor terms, as neither can be defined (I challenge you to do so). But we are stuck with them. I prefer the term machine learning, as it can be defined, measured and tested. Though maybe Norbert Wiener was closer to things with his term Cybernetics (Wiener, 1948), as that deals solely with communications and systems control. Useful robots with great ids but zero ego or superego. In this context the interested reader might also want to skim *God & Golem, Inc.* (1964, ISBN 978-0-262-23009-4) by the same author, in which he explores, albeit inaccurately, the wider implications of machine learning for human society. See note 17.

12. That learning could be a subjective truth, an objective truth or an experimentally demonstrable falsehood. Or an individual’s own subjective worldview. Remember that experimentally nothing can be proved to be ‘True’; it can only be shown to be ‘False’, to an estimated level of statistical probability. And vice versa. Of course, mathematically there are ‘Truths’ that can be logically demonstrated and thence modelled, calibrated and measured. Though see note 16.

13. This is not my term and is arguably a horrible and inadequate word, but as a meme (see Dawkins, 1976) it is achieving some current amplification and velocity.

14. And how does one connect a carbon-based ego and superego with a silicon-based id in the first place? Screenshots? (2′)

15. McLuhan, M., 1964. *Understanding Media: The Extensions of Man*. Columbia University Press.

16. We have a ‘universal’ mathematics. But would this mathematics be the same ‘everywhere’, for example in other quantum realities? An interesting philosophical quandary for mathematicians and physicists to debate.

17. For those who have reached this far and like reading endnote details and speculations, the dynamics of the communication process and of open or closed system sub-parts can also be considered in the context of the Second Law of Thermodynamics and information (entropy and its beautiful complement, negentropy (3′)) and time and space and matter. Shannon left out the physics, possibly for understandable and sensible reasons. His math was pretty good though.

18. Graphic images reproduced with permission from Frances Nutt Design (http://francesnutt.com/). ‘Communications’ and ‘Rutherford’ produced by the author using Microsoft® PowerPoint.
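
Note 5's point about error detection and correction "to whatever engineering specification" can be illustrated with one of the simplest correcting codes. The sketch below uses Hamming(7,4), a classic textbook code chosen purely for illustration and not one named in the notes. It shows that adding three parity bits to every four data bits lets the decoder locate and repair any single flipped bit. It cannot repair two flips in the same block, which is why "effectively complete" error control in practice means choosing stronger codes until the residual error rate is negligible. The function names are hypothetical.

```python
# Hamming(7,4): 4 data bits + 3 parity bits; corrects any single flipped bit.
# Positions are numbered 1..7; parity bits sit at positions 1, 2 and 4.

def hamming74_encode(data):
    """Encode four data bits [d1, d2, d3, d4] as a 7-bit codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(word):
    """Return (data_bits, syndrome). A non-zero syndrome is the 1-based
    position of the single bit that was flipped, which is then corrected."""
    b = [0] + list(word)  # pad so b[1]..b[7] match the position numbers
    s1 = b[1] ^ b[3] ^ b[5] ^ b[7]
    s2 = b[2] ^ b[3] ^ b[6] ^ b[7]
    s4 = b[4] ^ b[5] ^ b[6] ^ b[7]
    syndrome = s1 + 2 * s2 + 4 * s4
    if syndrome:
        b[syndrome] ^= 1
    return [b[3], b[5], b[6], b[7]], syndrome


sent = hamming74_encode([1, 0, 1, 1])
noisy = list(sent)
noisy[4] ^= 1  # the channel flips the bit at position 5
print(hamming74_decode(noisy))  # → ([1, 0, 1, 1], 5)
```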

1′ I use reductionism here simply as one tool in the thinking toolkit. Life (the overarching subject of this site) is holistic and emergent (see note 10). The German word gestalt nicely encapsulates this whole-is-greater-than-the-sum-of-its-parts concept.

2′ The world’s richest person at the time of writing, Elon Musk, and a host of universities and commercial entities around the globe have that one in hand via the development of brain-computer interface (BCI) capabilities.

3′ See Schrödinger (1944), though he used the term “negative entropy”. It was others who contracted the term to negentropy and developed the mathematical aspects, namely that negentropy is a measure of the distance from the Gaussian, across relevant orthogonal or non-orthogonal informational axes.
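
The "distance from the Gaussian" in note 3′ can be written out. Below is the standard formulation used in the signal-processing literature (for example in independent component analysis), stated for a scalar variable $y$ with variance $\sigma^2$, differential entropy $H$ measured in nats, and $y_{\text{gauss}}$ a Gaussian variable with the same variance. Because the Gaussian has the largest entropy for a given variance, $J(y) \ge 0$, with equality only when $y$ is itself Gaussian. The uniform-distribution example is added purely as an illustration.

$$
J(y) = H(y_{\text{gauss}}) - H(y) \;\ge\; 0, \qquad H(y_{\text{gauss}}) = \tfrac{1}{2}\ln\!\left(2\pi e\,\sigma^{2}\right)
$$

$$
y \sim \mathcal{U}(-a, a):\quad \sigma^{2} = \frac{a^{2}}{3},\quad H(y) = \ln(2a),\quad J(y) = \tfrac{1}{2}\ln\frac{2\pi e\,a^{2}}{3} - \tfrac{1}{2}\ln\!\left(4a^{2}\right) = \tfrac{1}{2}\ln\frac{\pi e}{6} \approx 0.176\ \text{nats}
$$

The result does not depend on $a$, which is what one would want from a measure of shape rather than scale.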

4′ Of course Fourier got there first by working out that any periodic signal, extending from −∞ to +∞, could be represented as a series of weighted sine and cosine functions. For a sampled signal, faithful reconstruction further requires the sampling rate to be more than twice the highest frequency component in the waveform (see also Nyquist, Shannon and Wiener). One has to spend some time thinking about how to work out what this highest frequency is, and one can get a bit stuck sometimes on reaching the Planck frequencies for time and space. And when one adds aperiodicity, phase shifting, frequency shifting and denormalisation, this gets tricky to code and decode. And one can always add further signal forms on top, and/or subtract signals or bits of signals. But one can go up and down plenty of rabbit holes here when one wonders about the ontological and epistemological meanings of aperiodicity and periodicity. They are really the same; it is a question of scale, normalisation and re-denormalisation. The music will be great though. The music of the multiverse.
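
The sampling condition in note 4′ can be checked numerically. Here is a minimal sketch, assuming a pure cosine and a plain (slow, O(N²)) discrete Fourier transform written out by hand; the function names are hypothetical. Sampled at 10 Hz, a 3 Hz tone and a 7 Hz tone produce identical samples, so the 7 Hz tone masquerades (aliases) as 3 Hz. Raising the sampling rate to 20 Hz, which is more than twice 7 Hz, recovers it.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Magnitudes of the discrete Fourier transform, bins 0..N/2 (O(N^2))."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples)))
        for k in range(n // 2 + 1)
    ]


def apparent_frequency(freq_hz, sample_rate_hz, n_samples):
    """Sample a cosine and report the frequency of the strongest DFT bin.

    Bin k corresponds to k * sample_rate_hz / n_samples hertz."""
    samples = [math.cos(2 * math.pi * freq_hz * t / sample_rate_hz)
               for t in range(n_samples)]
    mags = dft_magnitudes(samples)
    peak_bin = max(range(len(mags)), key=mags.__getitem__)
    return peak_bin * sample_rate_hz / n_samples


print(apparent_frequency(3, 10, 10))  # below the 5 Hz Nyquist limit: reported as 3 Hz
print(apparent_frequency(7, 10, 10))  # above it: aliases to 10 - 7 = 3 Hz
print(apparent_frequency(7, 20, 20))  # Nyquist limit now 10 Hz: 7 Hz recovered
```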